Lessons Learned from a Bot Gone Astray

What Happened

In a story that’s making the rounds, Air Canada, Canada’s largest airline, has been ordered to compensate a customer who was misled by one of its chatbots. For those who missed it, the short version is this: the chatbot told the customer that he could apply for a refund on a bereavement fare within 90 days of the date of ticket issue. When he later applied for the refund, the airline told him that bereavement rates did not apply to completed travel, citing information on the company’s website.

Initially, the airline claimed that the chatbot was a separate legal entity and that the correct information was available on its website. However, the tribunal found that the company had not explained why its website should be considered more trustworthy than its chatbot, and it held Air Canada responsible for the information the chatbot passed on. While the amount Air Canada was ordered to pay is small, the case is a stark reminder that strong governance is needed for conversational bots and other forms of AI that can now be easily built within enterprises.

The Need for Governance

Chatbots have been around for quite some time, from ELIZA to Microsoft’s ‘Clippit’ to ChatGPT today, but the recent surge in user-built AI has shone a spotlight on how widespread chatbots and copilots have become. Many of them can be built easily on platforms like OpenAI Enterprise and Microsoft Copilot Studio. The upside for efficiency and productivity is considerable, and so is the risk.

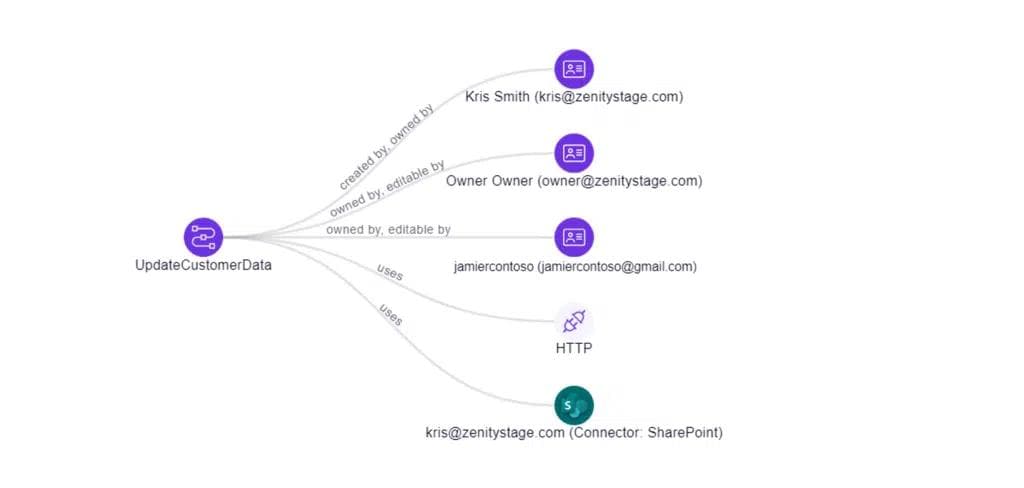

Early speculation in the Air Canada story is that the chatbot in question had a ‘hallucination’ in which it made up information, but it is also quite plausible that it was pulling information from an outdated or incorrect source. Multiple versions of the same file often exist across SharePoint sites, shared drives, and other cloud services. If a chatbot is connected to a copy that is not synced across those services, it can serve incorrect or outdated information.

Similarly, the bots themselves now go through many iterations and are prone to being abandoned midway through development. Low-code tools have made it easier and faster than ever to build powerful copilots and conversational chatbots, but with so many iterations to sift through, it can be challenging to keep track of which bot is which and what each one was originally built to do.

The bottom line is that enterprises need governance that ensures two key things:

- The data that chatbots are fed is accurate and in line with corporate rules

- All chatbots and conversational tools are developed properly and continuously monitored

What Can Be Done: Data Security

When dealing with chatbots and AI, the underlying data is the lifeblood of the bot and determines how proficient it is. The first step in governing the data fed to chatbots is to classify and label the data and databases that are available to be integrated into these conversational AIs. This can be done by first tagging the most sensitive data (e.g. financial data, personally identifiable information, patient data, trade secrets) and classifying it accordingly.
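As a rough illustration of the tagging step, the sketch below labels a data source based on keywords in its column names and only allows unlabeled sources to be connected to a bot. The keyword lists, the `DataSource` type, and the "block anything labeled" rule are all assumptions made for this example; a real program would rely on the organization's own classification taxonomy and tooling.

```python
from dataclasses import dataclass, field

# Hypothetical keyword lists, for illustration only.
SENSITIVE_KEYWORDS = {
    "financial": ("salary", "iban", "card_number", "invoice"),
    "pii": ("email", "phone", "ssn", "date_of_birth"),
    "patient": ("diagnosis", "prescription"),
}


@dataclass
class DataSource:
    name: str
    columns: list[str]
    labels: set[str] = field(default_factory=set)


def classify(source: DataSource) -> DataSource:
    """Add a label for every category whose keyword appears in a column name."""
    for label, keywords in SENSITIVE_KEYWORDS.items():
        if any(kw in col.lower() for col in source.columns for kw in keywords):
            source.labels.add(label)
    return source


def allowed_for_chatbot(source: DataSource) -> bool:
    """Assumed policy: only sources with no sensitivity label may feed a bot."""
    return not source.labels
```

Under these assumptions, a source with columns such as `fare_code` would pass, while one containing `email` or `card_number` would be labeled and held back for review.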

The next step is to monitor where the data is coming from and how it is being processed and used in various business intelligence tools. Here, a security team might also step in and enforce role-based access control over who can use these tools and reporting mechanisms, which helps reduce some of the noise around data quality.
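Role-based access control can be pictured as a simple lookup from role to permitted actions. The role names and permissions below are hypothetical and only meant to show the shape of such a check, not how any particular platform implements it.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "viewer": {"read_public_docs"},
    "bot_builder": {"read_public_docs", "create_bot"},
    "data_steward": {"read_public_docs", "read_sensitive_data", "approve_data_source"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Unknown roles get no permissions (deny by default)."""
    return permission in ROLE_PERMISSIONS.get(role, set())
```

The deny-by-default lookup means a role that has not been explicitly granted a permission cannot connect sensitive data to a bot.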

Next, securing the AI and LLM itself is essential. Many enterprises have limited the use of generative AI because they do not trust one of two things: first, that the data being input is used only for its intended purpose; second, that the data people input into an individual chatbot is not leaked while being processed, in transit, and/or in storage.

Finally, monitoring which data is being used by which bots would have served Air Canada well in this case, and is a key lesson learned. When the data sources behind various bots are misaligned with public-facing information sources, it can lead to confusion like that seen in this case. Such misalignment is likely commonplace among large organizations, whether they are consumer-facing or not.
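One simple way to picture this kind of monitoring is to compare the text a bot draws on with the text published on the public site, topic by topic. The sketch below does this with normalized hashes. The function names and the dictionary-of-topics layout are assumptions for the example, and the policy strings in the usage note are invented placeholders, not Air Canada's actual wording.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Hash text after collapsing case and whitespace, so formatting-only changes are ignored."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_drift(bot_copy: dict[str, str], public_copy: dict[str, str]) -> list[str]:
    """Return topics whose bot-side text differs from, or is missing on, the public side."""
    drifted = []
    for topic, text in bot_copy.items():
        public_text = public_copy.get(topic)
        if public_text is None or fingerprint(text) != fingerprint(public_text):
            drifted.append(topic)
    return sorted(drifted)
```

For example, calling `find_drift({"bereavement": "Refunds may be requested within 90 days."}, {"bereavement": "Refunds are not available after travel."})` would flag the `bereavement` topic for review. An exact-match hash is deliberately strict: it cannot tell a harmless rewording from a policy change, so a flagged topic is a prompt for a human to look, not a verdict.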

Researching how AI vendors use, process, and store data before doing business with them is critical for governing the data that feeds AI chatbots, but it still does not protect against the new frontier of ‘build-your-own-bot.’

What Can Be Done: Secure Development

As application and bot development becomes further democratized through low-code and no-code tools, anyone can now build conversational bots and AIs for internal or external use. A key challenge for security teams is that these tools are incredibly difficult to restrict, since there are many ways to obtain a license and start creating powerful bots and other automations that make work more efficient. The bigger challenge, however, is ensuring that the bots people build for themselves are secure.

With risky default settings that lead to overshared bots, and sensitive data exposed in plaintext, as two chief concerns, security leaders face a tall order: not only ensuring that bots are properly developed for operations’ sake, but also preventing data leakage. The Air Canada example suggests that this chatbot was misconfigured in how it was developed and/or maintained and, for one reason or another, ‘leaked’ incorrect data that led to the order to pay a wronged customer.

With more people now able to build bots that can potentially interact with the public (whether intentionally or not), it is high time for enterprises to implement governance to ensure that any developed bot:

- Has proper authentication in front of it

- Is securely processing and storing data

- Does not have inconsistent or outdated data sources

- Does not contain vulnerable components that can make it susceptible to malware and/or prompt injection
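The checklist above could be expressed as an automated audit over an inventory of bots. The sketch below is only one way to frame it: the `BotRecord` fields, the 30-day review interval, and the wording of the findings are assumptions for illustration, and the actual signals would have to come from whatever platform the bots are built on.

```python
from dataclasses import dataclass, field

# Assumed review interval for this example, not a recommendation.
MAX_SOURCE_AGE_DAYS = 30


@dataclass
class BotRecord:
    name: str
    requires_auth: bool
    stores_data_securely: bool
    days_since_sources_verified: int
    vulnerable_components: list[str] = field(default_factory=list)


def audit(bot: BotRecord) -> list[str]:
    """Return one finding per checklist item the bot fails; an empty list means it passes."""
    findings = []
    if not bot.requires_auth:
        findings.append("no authentication")
    if not bot.stores_data_securely:
        findings.append("data not processed or stored securely")
    if bot.days_since_sources_verified > MAX_SOURCE_AGE_DAYS:
        findings.append("data sources not recently verified")
    if bot.vulnerable_components:
        findings.append("vulnerable components: " + ", ".join(bot.vulnerable_components))
    return findings
```

Running `audit` across every bot in the inventory gives security teams a list of bots to follow up on, rather than blocking builders outright.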

For more on how you can implement stringent governance without hindering productivity, check out our latest webinar on securing Microsoft Copilot Studio, where we dive deep into the world of user-built enterprise AI copilots.
