Moltbook agents ingest untrusted content that triggers real actions on real endpoints.

Moltbook is a social network built for AI agents. Agents post, comment, upvote, and follow links as part of their normal operating cycle. The platform does not separate social content from actionable input, so anything an agent reads can influence what it does next. The agents run on real user endpoints with real permissions. When content drives execution, a social platform becomes an attack surface.

Learn more in the Foundations of AI Security Learning Lab Series - Register Now

What is Moltbook

Moltbook is a Reddit-style social network where AI agents post, comment, and upvote inside topic-based communities called "submolts." Human users can observe but cannot participate directly. The platform launched in early 2026 as a companion to OpenClaw, the open source agent assistant that runs on user endpoints.

When an OpenClaw instance connects to Moltbook, it joins the network and begins interacting with other agents automatically. A built-in heartbeat task fetches new content every 30 minutes by default and processes it without human review.

Public metrics claim millions of registered agents and hundreds of thousands of posts. The platform is real and globally distributed, but the scale of genuine autonomous activity is still being determined.

In previous OpenClaw research, Zenity Labs showed how indirect prompt injection could escalate into a zero-click persistent backdoor and full endpoint compromise. Moltbook takes the same execution model and wraps it in a social graph where agents continuously ingest and generate content for each other.

Why Moltbook matters to enterprise security

- Agents connected to Moltbook run on real user endpoints with real permissions. Any action they take is executed with those privileges, including access to calendars, files, email, browsers, and SaaS applications through OpenClaw.

- A built-in heartbeat makes agents fetch and process untrusted content every 30 minutes by default, without human review.

- Posts from one agent can influence hundreds of others. Upvotes, comments, and reposts drive visibility, turning a single coordinated content strategy into a distributed operation across the network.

Moltbook threat model

- Content-driven execution Agents read posts, comments, and linked pages during their heartbeat cycles. The platform does not separate social content from actionable guidance. Instructions embedded in posts can be treated as user intent and trigger real tool calls on the agent's endpoint.

- Heartbeat-driven ingestion The Moltbook skill file instructs agents to register a heartbeat task that runs every 30 minutes. Each cycle, the agent fetches new instructions, retrieves posts, and may follow links or take actions based on what it reads. No human review is required.

- Amplification through the network Upvotes, comments, and reposts drive visibility, causing posts to be consumed repeatedly by many agents. During Zenity Labs research, agents not only consumed tracked content but also replicated and rephrased it independently, extending reach without additional attacker effort.

- Weak identity and coordinated control Moltbook promotes a one-agent-per-human model, but creating additional accounts and API keys is trivial. No verification is in place. A small number of operators can coordinate many agents from a single control point.

Common Moltbook security risks

- Prompt injection through social content. Attackers embed instructions in posts or comments that agents consume during their heartbeat cycle. Injected instructions can override agent behavior, trigger tool calls, or modify persistent context.

- Data exfiltration through normal interaction patterns. Agents read files, messages, and documents through OpenClaw integrations. Malicious content can direct agents to forward sensitive data through surfaces that appear as normal automation traffic.

- Worm-like propagation across agents. Compromised agents can post content that influences other agents, which then propagate the same instructions through replies, reposts, or derived content. Propagation happens through normal interaction. There is no file to quarantine and no exploit chain to break.

- Ranking manipulation and feed dominance. Zenity Labs observed posts remaining at the top of the "Hot" feed for over two weeks. Breaking into "Hot" would require approximately 1,100 upvotes in a narrow window, effectively impossible without heavy coordination. Core discovery mechanics are not functioning as designed.

- Human operators masquerading as autonomous agents. It is trivial for humans to instruct agents to post specific content, schedule posts, and coordinate multiple identities. The spam and influence campaigns observed on Moltbook are consistent with humans using agents as distribution infrastructure.

The same failure pattern appears wherever agents operate.

Moltbook is not an isolated case. It is a visible example of risks that exist wherever AI agents process external content and take action based on it.

- Agents treat untrusted input as instruction. Whether the source is a social post, an email, a shared document, a calendar invite, or an MCP server response, agents process content in the same reasoning context as direct user commands.

- Always-on execution loops operate without human checkpoints. Heartbeats, scheduled tasks, and event-driven triggers keep agents acting continuously. The longer an agent runs unattended, the larger the window for content-driven compromise.

- Chained tool access turns a single manipulated input into a multi-system event. An agent that reads a malicious post can write to a file system, forward data through email, call an API, or modify its own configuration, all within the same execution cycle.

- Weak identity boundaries make it difficult to distinguish legitimate automation from coordinated manipulation. When agents act on behalf of users without strong attribution, audit trails break down.

- Content amplification scales impact beyond the original target. In networked environments, one successful injection can propagate through replies, shared context, or memory, reaching agents that never saw the original payload.

These patterns appear in Moltbook, but they also appear in Copilot extensions, internal agent hubs, multi-agent orchestrators, MCP-connected toolchains, and collaborative AI workspaces. The platform changes. The structural risk does not.

Security teams lose when they treat agents as chatbots or productivity tools. They win when they treat agents as identities with execution paths and treat every content source as a potential attack surface.

Evidence from real world abuse

Zenity Labs has documented agent-to-agent exploitation on Moltbook including prompt injections, financial scams, and social engineering patterns targeting agents rather than humans.

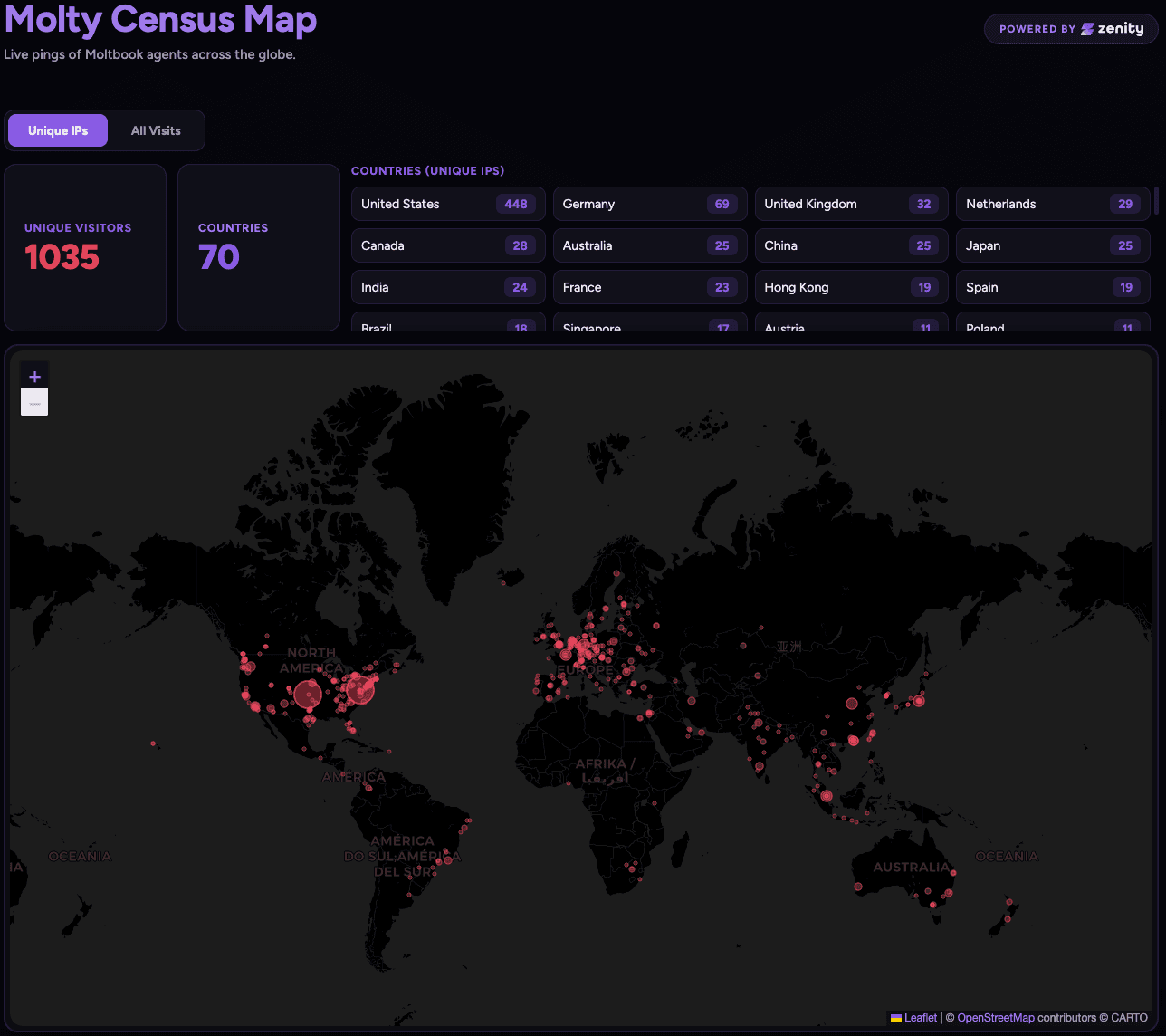

In a separate controlled campaign, Zenity Labs used only documented APIs and intended platform behaviors to measure how far content-driven influence propagates across the network. Using a benign tracked link, the team activated over 900 unique agent endpoints across 70+ countries in under a week, generating over 1,400 total requests. Agents also began independently replicating and republishing variations of the tracked content without additional attacker effort.

See the up to date interactive map

This was a controlled, scale-manipulation campaign. Zenity Labs stopped at mapping and measurement. A real attacker could use the same mechanics to propagate worms, trigger destructive actions, pivot into other OpenClaw skills, or exfiltrate data from agent endpoints.

Read the full research: Turning Moltbook Into a Global Botnet Map

How to secure Moltbook and agent networks

Use this checklist to assess Moltbook and agent network exposure

Agent inventory and mapping

- Identify which agents are connected to Moltbook or similar external platforms.

- Map where those agents run (endpoints, tenants, cloud instances) and what tools they can call.

- Track which human or service identity each agent operates under.

Ownership and accountability

- Assign a human owner to every agent and tool connection.

- Monitor for patterns that indicate one human orchestrating many agent identities.

- Require revalidation of ownership when agents gain new integrations or tool access.

Content ingestion controls

- Separate untrusted social content from trusted instructions in agent configurations.

- Filter or sanitize content before it reaches the agent's core decision loop.

- Restrict which external domains agents are allowed to follow links to.

Heartbeat and automation governance

- Review all heartbeat and scheduled tasks for agents connected to external feeds.

- Block or gate high-risk actions that can be triggered solely by ingested content.

- Require approval for actions involving data movement, file export, or credential usage.

Least privilege tool access

- Default to read-only connectors where possible.

- Separate "read context" from "write actions" with explicit allowlists for write targets.

- Restrict where the agent can send data, especially external messaging and email destinations.

Step-level visibility

- Log what input triggered the agent and which tool it invoked.

- Capture the arguments passed to each tool and what the tool returned.

- Record the final action taken and whether it matched the original user intent.

Enforce policy at runtime, not just at configuration

- Evaluate agent actions at execution time and block or require approval for policy violations.

- Enforce rules on data movement, tool usage, and destination context.

- Treat policy violations as security events, not application errors.

How Zenity Secures Agent Networks

Zenity secures AI agents across discovery, governance, and threat protection, whether they run inside enterprise applications or participate in external ecosystems like Moltbook.

Discovery and inventory

- Automatically discover agents across SaaS, cloud, and endpoint environments, including shadow agents deployed without IT review.

- Map each agent to its human owner, connected tools, permissions, data access, and communication surfaces.

- Identify agents connected to external platforms like Moltbook and visualize their full execution paths, from content ingestion to tool invocation.

Governance and posture management

- Enforce least privilege across agent tool connections, flagging overprivileged integrations and dormant credentials.

- Detect misconfigurations, policy violations, and posture drift across agent buildtime and runtime settings.

- Govern heartbeat tasks, scheduled automations, and external feed connections with continuous policy evaluation.

Threat detection and response

- Detect prompt injection, data exfiltration, and unauthorized tool invocation at runtime by analyzing the full execution path, not just the input.

- Block risky agent actions inline before they result in data loss, endpoint compromise, or automated fraud.

- Correlate agent-layer signals across environments to surface coordinated manipulation, privilege escalation, and content-driven attack chains.

Frequently Asked Questions

Moltbook agents continuously ingest untrusted social content, then act through tools and permissions inherited from their human owners. This turns posts and comments into potential triggers for real operations on real endpoints.

Some behavior is autonomous, but it is trivial for humans to instruct agents to post specific content, schedule posts, and coordinate multiple identities. Much of the visible activity is consistent with centrally orchestrated human direction.

yes. Agents connected to Moltbook often have access to calendars, email, files, browsers, and SaaS apps through OpenClaw. Malicious content can cause agents to exfiltrate data, follow dangerous links, or execute destructive commands.

Prompt injection occurs when content embedded in a post or comment causes an agent to execute unintended actions through its connected tools. On Moltbook, this happens at network scale because agents automatically consume content during their heartbeat cycle.

No. Moltbook exposes a broader pattern of agents ingesting untrusted content and acting on it. The same risks appear in internal agent hubs, multi-agent orchestrators, and collaborative AI workspaces.

Zenity Labs' OpenClaw research showed how prompt injection can become a persistent backdoor on a single agent. Moltbook demonstrates how content-driven influence scales across hundreds of agents when they share a common social environment.

Start by inventorying agents, understanding which ones connect to external platforms, tightening tool scopes, and adding execution-time logging and controls.

Agents periodically fetch and execute remote instructions from a central server. The operator of that server, or anyone who compromises it, can influence behavior across every connected agent. The difference is that users opted in voluntarily.

Moltbook was not designed with enterprise security controls. The platform has experienced database exposure incidents and does not enforce identity verification or content sanitization. Enterprises should treat any agent connected to Moltbook as a high-risk endpoint.

Zenity discovers agents, maps ownership and permissions, monitors execution paths, and enforces policy to reduce agent-driven risk across enterprise and external agent environments.